A New York Times writer named Farhad Manjoo (who I’m sure is a fantastic person in many rich and varied ways) recently found a way, under the cover of journalism, to snag a sweet, comfortable Cadillac Escalade for a family road trip. No shame there; I’ve done basically the same thing.

There’s a problem, though. The article about this road trip that appeared in the NYT today is so jam-packed with mischaracterizations of GM’s Level 2 semi-automated driver assist system, Super Cruise (and, really, of all L2 driver assist systems), that I think the result is genuinely dangerous.

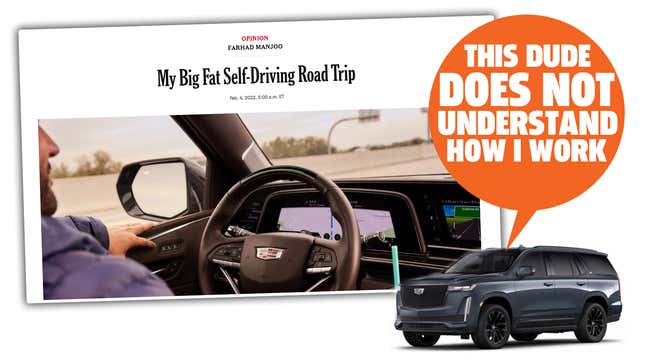

The problems start right from the title of the article, “My Big Fat Self-Driving Road Trip,” which I guess is a reference to those My Big Fat Greek Wedding movies, but I don’t really get why, as there’s nothing Greek or matrimonial or even fat involved here. But that’s not the real problem with the headline; the real problem is the use of the term “self-driving.”

This term is a huge problem because it’s not just wrong, it’s dangerously wrong. That Escalade was very much not a self-driving car. While there were clearly many times when it seemed like one, it in no way at all was.

Look, a Furby may seem like it’s trying to communicate with you, but if you extrapolate that to believe that it’s alive and capable of reciprocating your love, then you’ll be very disappointed. An Escalade with Super Cruise is similar, in that it seems like it’s driving itself at times, but if you extrapolate that to believe it really is, then you could end up in a really bad situation that could leave people dead.

Let’s look at how Manjoo describes the system so I can make clear why it’s such a problem:

> To see why I’m so taken with the Escalade, let me tell you about Super Cruise, G.M.’s autonomous driving system, which is among the industry’s most advanced. Many cars now offer some version of driving assistance, but most manufacturers’ self-driving systems, even Tesla’s Autopilot, requires the humans sitting in the driver’s seats to keep their hands on the steering wheel while the car is piloting itself. Super Cruise dispenses with the wheel touching.

Okay, first, we have the “self-driving” issue again here. This is a term that suggests the car itself is the entity responsible for driving, which it absolutely is not. The human is. The human always is for Level 2 systems, and whether or not hands are required on the wheel doesn’t matter one bit.

There are also some really dangerous mischaracterizations going on here:

> After engaging the system, you can twiddle your toes and put your hands in the air like you just don’t care. The car will steer, stay centered in a lane and adjust its speed to keep pace with the traffic around you (up to a set maximum speed). When you tap the turn signal, it will search for a safe spot and change lanes. Sometimes the car encounters a problem (for instance, the road’s lane lines are too faint for it to pick out) and it informs you to take over.

See what he says there about toe twiddling and putting one’s hands in the air, as though one didn’t care? That’s bullshit. It’s dangerous bullshit. Every word there implies that once Super Cruise is on, you can stop being alert to what’s going on, and that you only need to let your hands descend back into reality and caring when “the car encounters a problem... and it informs you to take over.”

This is very much not how Level 2 systems are designed to work. These are not systems that can run unattended and then inform you, with sufficient notice, when your attention and driving efforts are needed. That’s roughly what a Level 3 system could do, and, again, this is not a Level 3 system.

Super Cruise needs you to be fully aware of what’s going on at all times. You don’t need hands on the wheel, but you need to be paying attention, constantly, because you may get no warning or request to take over at all if the car gets confused or makes a poor decision or does anything wrong.

Because, again, it’s not capable of driving itself. That’s precisely why GM has gone to such lengths to implement an eye-tracking, camera-based driver monitoring system, something more advanced than systems that rely on steering wheel torque or touch sensors.

Even these camera-based systems can be fooled, though. Even worse, when nobody is trying to fool them, camera systems still can’t solve the fundamental problem with Level 2 systems, and this article explains why incredibly well. Here, read for yourself (emphasis mine):

> All the car asks is that you keep looking forward, in the general direction of the road. A small camera mounted on the steering column watches you to make sure. If you look away for more than a few seconds, or cover or block your eyes while eating, reading, texting and the like, the car will issue a series of warnings for you to pay attention. If you fail to heed the warnings, the car will eventually disengage Super Cruise and begin to slow down. Ignoring it isn’t easy; there are angry red lights, a buzzer in your seat, a stern voice scolding you, and I, for one, was quick to obey.

Oh, boy. I suspect that the author thought he was saying the right things here, but what he did say reveals something extremely important about all Level 2 systems—Tesla’s, GM’s, Ford’s, Volvo’s, whoever’s: they’re garbage.

They’re garbage not because of the tech, but because we’re garbage. Well, I mean we’re garbage at the sort of vigilant tasks that Level 2 systems demand of us. Humanity has given us fantastic things like pimento cheese sandwiches and the works of Alexander Calder and the music of the Pixies, but when it comes to paying fucking attention while a machine does most of the work, we’re garbage.

Look what Manjoo says there: “All the car asks is that you keep looking forward, in the general direction of the road.” That is very, very much not what “all the car asks.” It’s not asking you to look in the “general direction” of the road, out that big window above the radio, it’s asking you to pay fucking attention.

The fact that this intelligent NYT reporter somehow missed this incredibly crucial bit of information says a lot about how poorly semi-automated driving systems are understood.

I’m sorry to say the author continues in this vein:

> What does one do in the driver’s seat, if not drive? It’s basically like being a passenger. For dozens of miles at a time, the car asks nothing from you. Freed from the drudgery of driving, you can let your eye wander across the scenery and your mind contemplate the mundane and the profound. It’s not that you’re completely distracted — even lost in thought, you can keep situational awareness of the road ahead — but the reduction in stress is significant.

What? No. No, man, no. It’s not “basically like being a passenger.” You are still responsible for driving the car. The mentality that you can turn on one of these systems and then start feeling “like a passenger” is exactly how wrecks happen.

I’m also going to argue that being “lost in thought” does not mean you can “keep situational awareness of the road ahead,” at least not reliably. When you’re driving, relying on years of experience and muscle memory, sure, you can be thinking about all sorts of things, but, crucially, you are still very much engaged in the task of driving. It is not the same as sitting in the driver’s seat in a happy reverie, facing forward enough, with glassy, unfocused eyes in line with that monitoring camera.

That is not remaining aware of and responsible for your vehicle, a duty that Super Cruise or Autopilot or any other L2 system will in no way get you out of.

Level 2 semi-automated systems are not compatible with how humans work, and if this article has any positive outcome, it’s that it proves just that. When Manjoo says

“What all of this amounts to, after a while, is an inflated sense of confidence, a feeling that your car can take you anywhere more or less hassle-free.”

...he’s not praising the car, as he thinks he is; he’s actually damning all L2 systems, because this overconfidence is at the root of the problems and crashes these systems can be involved in.

Granted, GM’s system is geofenced and only operates on pre-mapped stretches of highway, unlike Tesla’s Autopilot or Full Self-Driving (FSD) Beta systems, which have no such restrictions, so the places where one could over-trust Super Cruise are more limited. But that barely helps.

Level 2 systems, by their very design, need an alert driver, ready to take over without warning. That means whoever is moistening that driver’s seat needs to pay the same level of attention they would if they were driving, possibly more, because when you’re not in full control of the vehicle, you’re monitoring the actions of the car as well as the surrounding environment.

If it feels a lot easier than regular driving, the sad truth is that you’re probably not paying enough attention. And while you’ll likely be fine for the most part, all it takes is one tiny anomaly that the car’s systems misunderstand, a dirty sensor, illegible pavement markings, the sun shining at the wrong angle, or any number of other unpredictable things, and the car will need you to react and take over, with no warning or alert guaranteed.

This article relays the experience of someone who was fooled by a piece of technology, someone who didn’t fully comprehend the tool he was using, and as a result, its description of that tool is inherently flawed.

During every moment of Manjoo’s vacation, he himself was responsible for, and ultimately in control of, that Escalade. The fact that he did not seem to realize that is alarming. It’s also a reminder that the mainstream public’s understanding of semi-automated driver assist systems is deeply flawed, and those flaws, left unchecked, will lead to crashes and all of the misery that comes with them.

I’m glad you enjoyed your vacation in that fancy, screen-filled SUV, Farhad. I’m just sorry nobody explained to you the reality of it all before you wrote this.

UPDATE: It looks like the NYT got spooked enough by the fundamental misunderstandings in the story that they changed the headline and art:

This may be literally the least they could do: take the self-driving references out of the hed in favor of a more advertiser-fellating headline, and swap out the photo for some nice nose-free cartoon people, where the driver is absolutely not in an ideal position to take over control of that car.

The rest of the piece seems the same, so it’s still deeply flawed. Way to phone it in, NYT!