Respected Automated Driving Expert Gives Tesla FSD Beta An "F"

An evaluation from someone with this level of expertise is worth listening to, and it raises some interesting points

Brad Templeton has been working with computers since way, way back in the day (dude helped port VisiCalc to the Commodore PET in 1979, for example). He has been involved with automated vehicle development since 2007, writing extensively about automated vehicles and then joining Google's team (which later became Waymo) in 2010.

Templeton has been in the driving automation space for years, and I think he's someone with a perspective worth listening to when it comes to automated driving. He recently became one of The Chosen to get Tesla's FSD Beta software to try, and he found it "terrible. I mean really bad." He explains his reasoning in a video with examples, so, you know, if you're filling up with rage, maybe just take a moment to watch it.

Templeton used FSD Beta version 10.8 on his 2018 Model 3, and conducted his tests on a relatively unchallenging 3.5-mile loop around Cupertino, California, near Apple's headquarters. This is significant because the area is not far from native Tesla lands, with conditions the software should presumably be very used to, and the weather, light, and visibility were all pretty close to optimal.

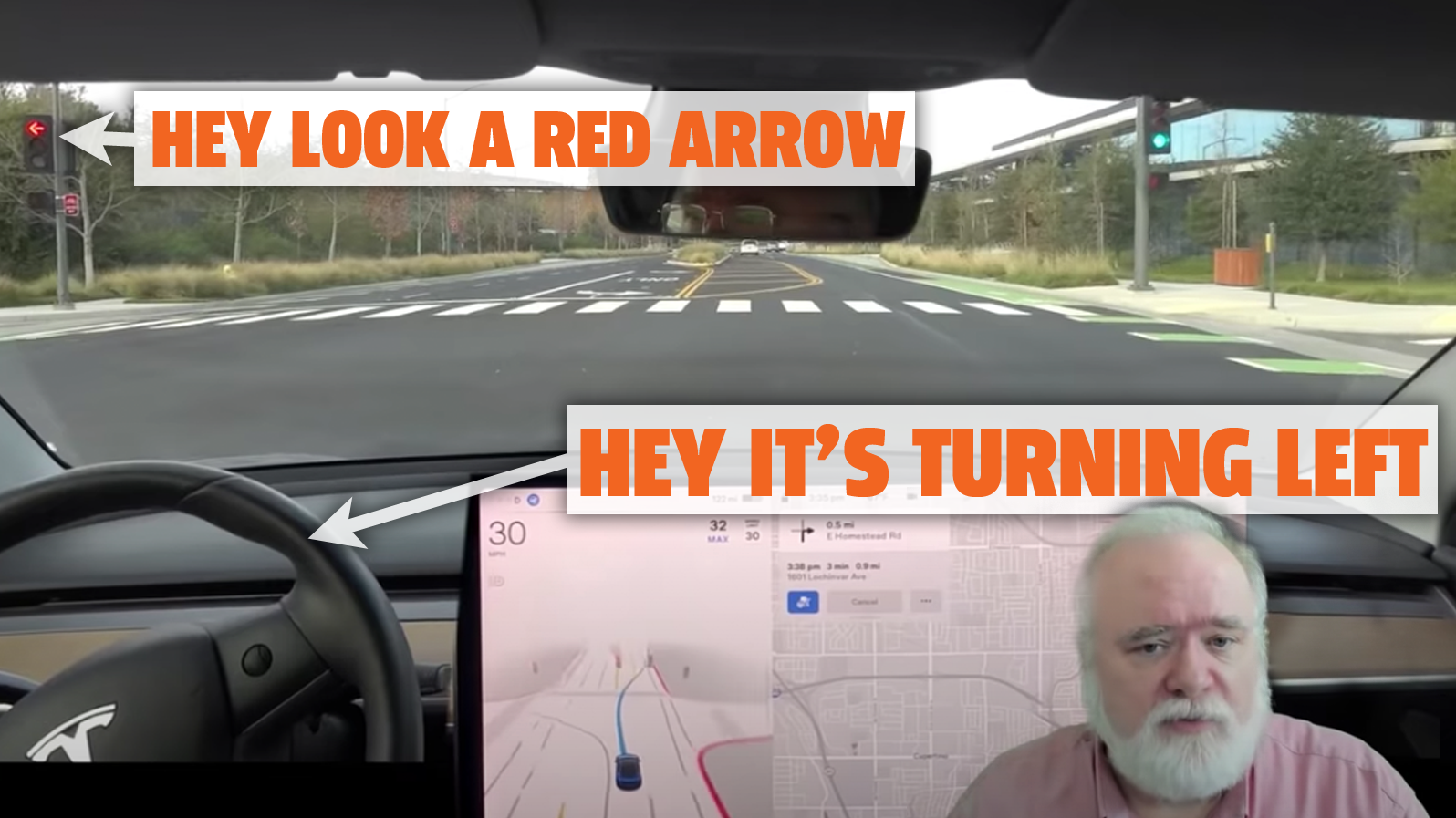

Despite all this, FSD Beta still drove the car jerkily, made confusing and sometimes downright incorrect decisions, inconvenienced other drivers, and, at one point, even attempted to make a left turn on a red light.

Here, just watch:

Templeton justifies his harsh grade by relating it to a human taking a driving test:

> To those who feel an "F" is too harsh, let me know where you can take a driving test and run 2 red lights, block the road crosswalk for long periods in multiple intersections while people honk at you and the tester has to grab the wheel twice to stop you from hitting things, and 3 times make random turns the tester didn't tell you to make — and still not get an "F" on the test. In fact, the test would be stopped at the first one of most of these.

He also categorized a number of issues noted during the drive:

1. Yielding too long at a 3-way stop, even though it was clearly there first (D+)

2. Veering towards a trailer on the side of a quiet street (F)

3. Being very slow turning onto an arterial and getting honked at (D)

4. Pointlessly changing lanes for a very short time

5. Failing in many ways at a right turn to a major street that has its own protected lane, almost always freezing and not knowing what to do (F)

6. Jerky accelerations and turns (D)

7. Stalling for long times at right turns on red lights (F)

8. Suddenly veering off-course into a left turn that's not on the route, then trying to take that turn even though the light is red! (F, and ends test immediately)

9. Finding itself in a "must turn left" lane and driving straight out of it, or veering left into oncoming traffic (F, and ends test immediately)

10. Handling a basic right turn with great uncertainty, parking itself in the bike lane for a long period to judge oncoming traffic (F)

11. Taking an unprotected left with a narrow margin, and doing it so slowly that the oncoming driver has to brake hard (Possible F)

Because of his extensive experience in the field, Templeton was able to make some pretty salient assessments of what he feels Tesla is doing wrong here, including Tesla's decision to eschew detailed maps that could have prevented a lot of confusion. Tesla's system maps on the fly as the car drives, which is certainly impressive, but problems could be avoided if the system made use of existing maps to help understand the roads.

There are also perception issues, caused in part by Tesla's wholesale reliance on cameras and its rejection of radar, lidar, and other sensing technologies. Templeton outlines more issues, including the planning methods, how the system forecasts, and more.

What I found most interesting was a point Templeton made about why the FSD system is so stressful to use, which is precisely the opposite of the whole point of such a system. Templeton maintains that Tesla's Autopilot, which is much more clearly a driver-assist system, is far more relaxing, because the role of the driver is more clearly understood and defined.

The FSD system, with its goal of becoming, as its name suggests, full self-driving, doesn't have this clarity of role, and as a result puts the driver in a position where they may be surprised by the car's choices, over and over.

Surprise isn't what you want from a self-driving system. When you're driving on your own, you're not surprised because you are actively making all of the driving decisions, so you know what your plan is. When the car is attempting to do it, you're really out of the loop, and forced to be ready to react to the car's choices, which you don't really know ahead of time.

Sure, there's the visualizer with the little path tentacles, but those are often jittery and constantly shifting and changing. It's not relaxing at all, not by a long shot. It's stressful, because you're both responsible and simultaneously not fully in control, and that's a terrible place to be.

There are lots of things to be impressed about with FSD, but that does not mean it's even close to ready. And, sure, it's clearly called a Beta, but, really, is it even that? If new sections of code are being rewritten and deployed, that's not exactly beta-level. Plus, should unfinished software that drives a 4,000-pound car really be deployed to the public without actually-trained safety drivers?

None of this is easy, and seeing an expert's honest and frank assessment of this most commonly deployed automated driving system is important.

I'm sure there will be lots of claims that Templeton has competing interests or stands to personally gain from Tesla's failure, along with all the other usual accusations Elon's Children fling at critics. But none of that changes the simple fact that the system still has a long, long way to go, and that criticism, even the harsh sting of an F grade, is how things get made better.

So, you know, suck it up.