In early 2016, 48-year-old Gao Jubin had high hopes for the future, with plans to ease up on running a logistics company and eventually turn over control of the business to his son, Gao Yaning. But his plans were abruptly altered when Yaning died at the wheel of a Tesla Model S that crashed on a highway in China while the car—Jubin believes—was traveling in Autopilot, the name for Tesla’s suite of driving aids. Now, Jubin says, “Living is worse than death.”

The last time Jubin saw Yaning, it was Jan. 20, 2016, days before the Chinese New Year. Jubin’s family had just left a wedding reception, and his son was in high spirits. The ceremony was for his girlfriend’s brother, so he’d spent the day keeping busy and helping out when needed.

Given that the wedding involved his potential future in-laws, Chinese tradition meant Yaning should enthusiastically help out with planning the ceremony, Jubin told Jalopnik this month, and he did. “He did a lot of logistics of the wedding, preparing transport and accommodating the guests,” Jubin said.

Following the ceremony, Yaning arranged transportation for his parents to get home. He took Jubin’s Tesla Model S and went to meet some friends. Before departing, Jubin said, his son offered up a word of caution: “Be careful driving.”

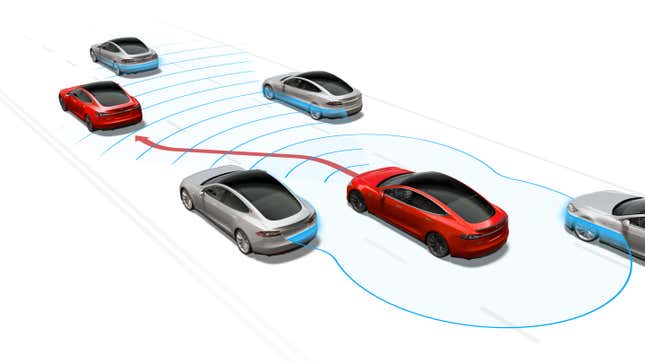

In retrospect, it was an omen. On Yaning’s way home, the Model S crashed into the back of a road-sweeping truck while traveling on a highway in the northeastern province of Hebei. At the time, his family believes, the car was driving in Autopilot, which allows those cars to automatically change lanes after the driver signals, manage speed on roads and brake to avoid collisions.

Yaning, 23, died shortly after the crash. Local police reported there was no evidence the car’s brakes were applied.

The crash in China came at a precarious time for Tesla. In the summer of 2016, the automaker and its semi-autonomous system were facing intense scrutiny after it revealed a Florida driver had died earlier that year in a crash involving a Model S cruising in Autopilot. An investigation by U.S. auto regulators was already underway.

But no one knew about Yaning’s death—which happened months before the Florida crash—until that September, when Jubin first went public about a lawsuit he’d filed against Tesla over the crash. Police had concluded Yaning was to blame for the crash, but Jubin’s suit accused Tesla and its sales staff of exaggerating Autopilot’s capabilities.

He asked a local court in Beijing to authorize an independent investigation to officially conclude that Autopilot was engaged. The suit sought an apology from Tesla for how it promoted the feature.

Multiple U.S. news outlets covered Jubin’s case after Reuters wrote about the suit in 2016, but interest almost immediately faded. The case, however, is still ongoing, according to Jubin, who spoke with Jalopnik this month by Skype in his first interview with a U.S. media outlet.

He hopes the suit will bring more attention to the system’s limited capabilities and force Tesla to change the way it deploys the technology before it’s refined. (A federal lawsuit filed last year in the U.S. echoed that concern, alleging the automaker uses drivers as “beta testers of half-baked software that renders Tesla vehicles dangerous.” Tesla called the suit “inaccurate” and said it was a “disingenuous attempt to secure attorney’s fees posing as a legitimate legal action.”)

In September 2016, the company said the extensive damage made it impossible to determine whether Autopilot was engaged. Following the incident, Tesla updated the system so that if a driver ignores repeated warnings to resume control of the vehicle, they would be locked out from using Autopilot during the rest of the trip.

Even if the system was engaged during the collision, Tesla told the Wall Street Journal, Autopilot warns drivers to keep their hand on the steering wheel, which is reinforced by repeated warnings to “take over at any time.” Yaning, Tesla said, took no action even though the road sweeper “was visible for nearly 20 seconds.”

A Tesla spokesperson said in a statement to Jalopnik that “We were deeply saddened to learn that the driver of the Model S lost his life in the incident.” A police investigation, the spokesperson said, found the “main cause of the traffic accident” was Yaning’s failure “to drive safely in accordance with operation rules,” while the secondary cause was that the street sweeper had “incomplete safety facilities.”

“Since then, Tesla has been cooperating with an ongoing civil case into the incident, through which the court has required that a third-party appraiser review the data from the vehicle,” the statement said. “While the third-party appraisal is not yet complete, we have no reason to believe that Autopilot on this vehicle ever functioned other than as designed.”

Within the first year of introducing Autopilot, in October 2015, Tesla faced intense criticism for labeling the feature as such. Regulators across the globe expressed concern that the name is misleading. Tesla has always maintained that Autopilot is only a driving aid—not a replacement—and that motorists must pay attention to the road while it’s engaged.

But Tesla’s Autopilot messaging has, at times, been criticized as conflicting and ultimately confusing. And Tesla drivers continue to push the system to its limits, with some producing videos that show Autopilot being used in ways the automaker wouldn’t officially endorse.

In the months following the crash, concerns over Autopilot seemed to dissipate, as carmakers ratcheted up efforts to develop semi-autonomous driving aids of their own that could challenge Tesla. Autonomy, the auto industry’s way of thinking went, was the way of the future.

The National Highway Traffic Safety Administration, in early 2017, also gave Tesla some peace of mind, after it cleared the automaker and Autopilot in the fatal Florida crash. But the U.S. National Transportation Safety Board later pinned blame partially on Autopilot and an over-reliance by the motorist on Tesla’s driving aids. (The NTSB can only make safety recommendations, while NHTSA’s authorized to order recalls or issue fines.)

In the Florida crash, NTSB Chairman Robert Sumwalt said, “Tesla’s system worked as designed. But it was designed to perform limited tasks in a limited range of environments. The system gave far too much leeway to the driver to divert his attention to something other than driving.”

There are few known crashes involving Tesla drivers that had Autopilot engaged, but given how relatively new autonomous technology is for commercially available cars, regulators have taken interest in how they function, even for minor accidents. The legal system has yet to seriously weigh in. A federal lawsuit over Tesla’s Autopilot rollout is pending; the first case over an accident involving an autonomous car—filed by a motorcyclist against General Motors—emerged only in January.

Tesla previously said that “any vehicle can be misused.”

“For example, no car prevents someone from driving at very high speeds on roads where that is not appropriate or from using cruise control on city streets,” the automaker said. “In contrast, Tesla has taken many steps to prevent a driver from using Autopilot improperly.”

But Tesla owners have continued to post examples of the system being misused, raising concerns that some either don’t understand Autopilot’s limitations, or rely on it far too much.

Those concerns were rekindled after a Tesla driver in January slammed into the back of a parked firetruck on a California freeway while his Model S was reportedly driving in Autopilot.

In response, the NTSB and NHTSA both launched new investigations into the use of Autopilot, highlighting some of the criticisms Jubin first raised after his son’s crash two years ago.

In particular, does Tesla do enough to ensure drivers won’t misuse Autopilot?

Officially, it hasn’t been concluded whether Autopilot was engaged at the time of Yaning’s crash. Tesla claimed the damage to Jubin’s car made it physically incapable of transmitting log data, so the company had “no way of knowing whether or not Autopilot was engaged at the time of the crash.”

But Jubin believes he has more than enough evidence—recorded in-car video footage, a report from an expert who examined the clips, anecdotal comments from other Tesla owners—to prove it.

The formal inspection hasn’t been completed, but Jubin’s attorney, Cathrine Guo, told Jalopnik on Tuesday that Tesla’s U.S. headquarters has turned over a document that decodes data produced on an SD memory card installed in the Model S. The document, Guo said by email, recorded the actions of both the car and driver and shows that Autopilot was on at the time of the crash.

When asked for a response, Tesla referred to the spokesperson’s statement, which said: “While the third-party appraisal is not yet complete, we have no reason to believe that Autopilot on this vehicle ever functioned other than as designed.”

In the dashcam clips, the Model S appears to drive smoothly, centered in lanes. “Even when the road was rugged and bumpy,” Jubin said, “it didn’t change course.”

Warning: The following video contains graphic content of the fatal crash itself.

Jubin said he also spoke with Tesla owners and experts, who agreed that Autopilot must’ve been engaged.

And at an initial court hearing, Jubin’s attorney said the speed of the Model S remained consistent for eight minutes before the collision. Jubin said he found a professor at Beijing Jiaotong University who’s an expert in autonomous driving. After reviewing the video and accompanying documents, Jubin said, “The professor felt that ... Autopilot had to be the cause of the accident.” Jubin’s attorney also said that Yaning hadn’t been drinking that day.

A judge from the Chaoyang District People’s Court of Beijing recently granted Jubin’s request for a third-party inspection to confirm whether Autopilot was engaged, and the probe could begin as early as this month, attorney Guo said.

“Based on all the information I have,” Jubin said through his attorney, who translated the conversation, “I have no doubt that accident [was] caused by the Autopilot.”

Throughout the nearly two-hour interview with Jalopnik, Jubin had to pause several times to compose himself. Yaning was a “very kind, selfless, altruistic” individual, he said.

“When he was a kid, when he was playing with his friends, he always took snacks from home and shared them,” Jubin said. “When some of the kids didn’t get it, he would come back home and get more.”

“He had been very understanding of us parents, helping us feed his little sister, or washing her clothes,” he went on. “Every time the four of us went out, it was like three parents with a kid... it was a really happy, beautiful family, and all of a sudden it was gone, like that.”

Yaning’s family grew up in Handan city, in the Hebei Province of China, where Jubin worked in the coal trade. Eventually, Jubin said, he started a logistics company and took on some public service work.

Jubin said his son spent two years in the army. He returned home and, eventually, applied to a local college to study business administration. The plan, Jubin said, was for him to one day take over the logistics firm.

“I did not expect everything was in vain,” he said.

Yaning’s last moments alive were captured on a camera that was apparently installed on the dashboard of the Model S. The lens looked out through the windshield and onto the road. Jubin’s attorney provided Jalopnik about two-and-a-half minutes of footage that was first published in September 2016 by Chinese state broadcaster CCTV.

The video shows the white sedan cruising along a four-lane highway in relatively clear weather. At one point, Yaning can be heard jubilantly singing, as the car merges from the middle to the left lane.

The car continues along the road, appearing to travel at the same speed. At one point, a car ahead of the Model S moves into the center lane, leaving an orange street sweeper straddling the road’s left shoulder directly in Yaning’s path.

Autopilot is designed to adjust speeds using adaptive cruise control. If, say, an object appears in the car’s path, an escalating series of visual and audio warnings is supposed to go off, signaling for the driver to resume control of the wheel. Based on the video, no warning alert went off and the Model S never slowed before Yaning slammed into the truck.

Tesla vehicles come equipped with automatic emergency braking technology regardless of whether Autopilot is turned on, which is supposed to alert the driver of a potential hazard. If the driver doesn’t react in time, the car should automatically apply its brakes.

But Tesla’s owner’s manual states that Autopilot isn’t adept when it comes to recognizing stationary vehicles at high speeds, as Wired notes. “Traffic-Aware Cruise Control cannot detect all objects and may not brake/decelerate for stationary vehicles, especially in situations when you are driving over 50 mph (80 km/h) and a vehicle you are following moves out of your driving path and a stationary vehicle or object is in front of you instead,” according to the manual.

Jubin had been home at the time of the crash. In the interview, Jubin said a Tesla customer service representative called and told him “we detected your airbag exploded.”

“I said, ‘My son was driving today. I don’t know the details, you should hang up and call my son, and find out what is going on,’” Jubin said.

Shortly after, a friend of Yaning’s, who had been driving near him in a separate car at the time of the crash, called Jubin and said Yaning had been in a serious accident. Jubin and his wife immediately left and drove to the site of the accident.

When they arrived, Jubin discovered first responders addressing a gruesome scene.

“I got there and saw the car was in pieces, and a lot of blood was streaming down to the ground from the car,” he said. “The blood covered a big area on the ground. We felt very sad. It was a very bad feeling, and my wife [was] praying in my car for my son’s safety. We got to the hospital and the doctor told me they tried and couldn’t save him.”

The intervening months took a toll. He suspended work at the logistics company and let every employee go. Jubin’s now focused solely on the lawsuit and taking care of his family.

“He’s gone and we don’t know how to carry on,” Jubin said. “Living is worse than death.”

Jubin’s main complaint is what he called Tesla’s misleading advertising of Autopilot. Since introducing the system in 2015, the automaker has taken flak from consumer advocates, as videos showed Tesla drivers reading or even sleeping while their cars were in motion.

Jubin said Tesla is to blame for how some customers have perceived the capabilities of Autopilot.

In particular, he pointed to a conversation he had with Yaning after purchasing the Model S. Yaning, he said, explained that a Tesla salesperson told him that Autopilot can virtually handle all driving functions.

“If you are on Autopilot you can just sleep on the highway and leave the car alone; it will know when to brake or turn, and you can listen to music or drink coffee,” Jubin said, summarizing the salesperson’s purported remarks.

This tracks with reporting after Yaning’s death went public. Some of Tesla’s Chinese sales staff, for instance, took their hands off the wheel during Autopilot demonstrations, according to a report from Reuters. (Tesla’s Chinese sales staff were later told to make the limitations of Autopilot clear.)

But Jubin said his son was “misled” by salespeople who oversold Autopilot’s capabilities. It continued even after Yaning’s death, he claimed.

“When I was at a Tesla retail store, they were still advertising, and online too, how you can sleep or drink coffee and everything,” he said.

After Jubin initially filed his suit in July 2016, Tesla removed Autopilot and a Chinese term for “self-driving” from its China website and marketing materials. The phrase zi dong jia shi means the car can drive itself, the Wall Street Journal reported at the time. Tesla changed that to zi dong fu zhu jia shi, meaning a driver-assist system.

“When you hear autonomous driving, or self-driving, when you hear it described as that, as safe, especially on expressways, it’s totally different from the description of assisted autonomous driving,” said Guo, Jubin’s attorney. “That’s one of the reasons we sued Tesla.”

Automakers are currently testing fully-autonomous cars, but no one in the industry expects them to be available to buy for years to come. Jubin’s supportive of the movement toward autonomy, but he urged drivers around the world to be cautious and fully understand their limitations.

“I hope more Tesla owners become aware of it,” he added, “and avoid accidents like this.”

The suit initially asked for about $2,000 in compensation for the family’s grief over Yaning’s death. But the complaint has since been amended, and now asks for 5 million yuan (roughly $750,000). If he prevails, Jubin said, he plans to use some of the money to start a charity fund “to warn more Tesla owners not to use Autopilot.”

“We hope there would be no more tragic families like ours,” he said.

Jubin believes the industry’s technological advancement toward fully-autonomous driving is certain, but he feels Autopilot was released prematurely.

“Tesla should release the feature after it’s fully developed,” Jubin said, “not in the process of perfecting it.”

UPDATE: In a response to this story sent after publishing, a Tesla spokesperson said that the driver’s father told Tesla personnel that his son knew Autopilot very well and had read the owner’s manual for Model S “over and over again.”

Furthermore, the automaker asked Jalopnik to note that the car warns that Autosteer is in Beta, requires hands on the steering wheel and should not be used on roads with sharp turns or questionable lane markings, and that it refers drivers to the manual, which additionally states the driver is responsible for minding the system.