Elon Musk Didn't Realize How Hard Self-Driving Would Be, Which Is Why He Should Read This Paper

Computers are great at lots of things, but generalizing isn't one of them. And that's very important if we want to let them drive us around.

Very recently, Elon Musk admitted something on Twitter that I believe has been obvious to many people for quite a while: making self-driving cars is really, really hard. Sure, Elon has flirted with this realization before, but has tended to come to dismissive conclusions about the reality of, um, reality, like when he said Tesla was close to full autonomy, save for "many small problems." The full scale of those "small problems" seems to be hitting Elon now, which is why I think he should read this short yet incredibly insightful paper that breaks down one of self-driving's most persistent hurdles.

Before we go into that, let's just look at Elon's tweet here, in response to a Tesla owner who was poking a bit of fun at the perpetual two-weeks-outitude of Tesla's Full Self-Driving (it's not actually self-driving, it's just a Level 2 semi-automated system, but whatever) Beta version 9.0:

Haha, FSD 9 beta is shipping soon, I swear!

Generalized self-driving is a hard problem, as it requires solving a large part of real-world AI. Didn't expect it to be so hard, but the difficulty is obvious in retrospect.

Nothing has more degrees of freedom than reality.

— Elon Musk (@elonmusk) July 3, 2021

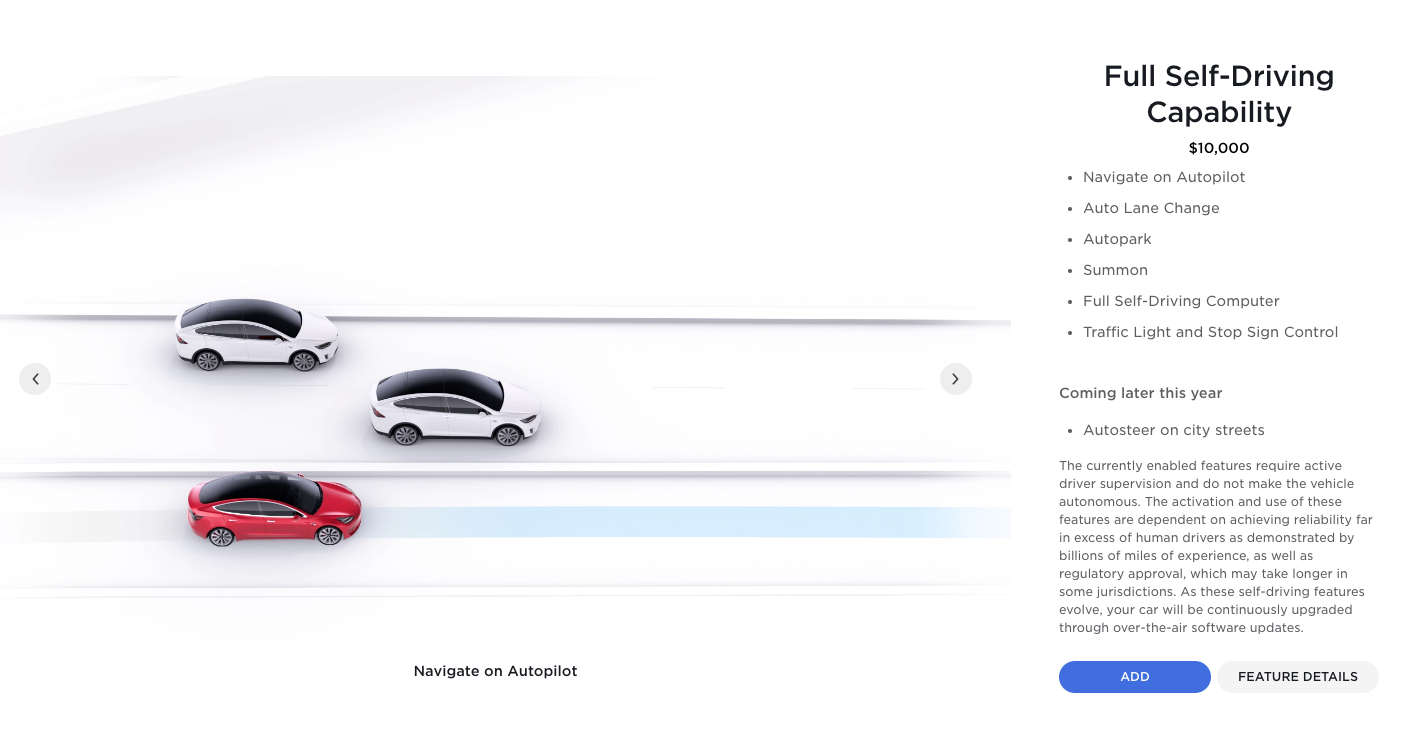

I'm really pretty baffled why he "didn't expect it to be so hard," but I guess that tracks considering the company has already been selling subscriptions for this still-unfinished bit of software for just 10,000 otherwise useless dollar bills.

Now, rabid Tesla fans are already claiming that FSD is safer than human driving, pointing to the innate processing speed advantages of computers over humans' squishy, dripping brains, as well as Tesla-sourced statistics. And yet we're still seeing some awful (and in this case, fatal) Autopilot-related crashes, like the one that took the life of a 15-year-old boy in August of 2019.

A fatal accident occurred when a @Tesla on Autopilot rear-ended a Ford truck. The front video from the Model 3 shows what happened in the final 5 seconds before impact. https://t.co/UM5JaJgdAh pic.twitter.com/bjJCZiSYa1

— Neal Boudette (@nealboudette) July 5, 2021

This particular crash happened when the Tesla on Autopilot somehow didn't see the pickup truck changing lanes in front of it (with a blinking turn indicator and everything) and rear-ended it. I point it out because it's the type of situation that is inherently difficult for an AI to deal with, because it involves perceiving intent.

This is something human drivers deal with all the time, and are remarkably good at, partially as a side effect of being social mammals for the past few million years. It's also the sort of thing that's very well explained in the paper I mentioned earlier.

That paper is "Rethinking the maturity of artificial intelligence in safety-critical settings," and it's written by M.L. Cummings, the director of Duke University's Humans and Autonomy Lab.

The key to both Elon's tweet and this paper is a word used in both: generalize. Okay, Elon said "generalized" but still. In this context, what that word is referring to is the process by which an algorithm could potentially "adapt to conditions outside a narrow set of assumptions."

In the case of autonomous driving and computer vision, this could be something like where a stop sign—something the AI's neural nets have been trained to recognize and execute a series of actions in response to—is partially obscured by foliage, or exists in a context outside that of an actual traffic sign, like on a billboard or T-shirt or something.

We've seen Teslas get confused by this type of thing before, situations that would not confuse human drivers: we understand that a stop sign on a billboard isn't intended for us to stop at, and we understand the reality of plant growth and stop signs and can quickly identify the sign even when significantly obscured.

That is to say, humans are good at generalizing: we can adapt to situations that don't fit neatly into set categories, even ones we have never encountered before.

The paper describes the human sort of generalized understanding as "top-down" reasoning, and the more brute-force, sensor-focused type of reasoning that computers are good at as "bottom-up" reasoning.

Here's how the paper explains it:

A fundamental issue with machine learning algorithm brittleness is the notion of bottom-up versus top-down reasoning, which is a basic cognitive science construct. It is theorized that when humans process information about the world around them, they use two basic approaches to making sense of the world: bottom-up and top-down reasoning. Bottom-up reasoning occurs when information is taken in at the sensor level, i.e., the eyes, the ears, the sense of touch, etc., to build a model of the world we are in. Top-down reasoning occurs when perception is driven by cognition expectations. These two forms of reasoning are not mutually exclusive as we use our sensors to gather information about the world, but often apply top-down reasoning to fill in the gap for information that may not be known.

AI-based driving is referred to as being "brittle" in the paper because, like brittle things, it breaks easily. When situations don't conform to expected rules (snow or graffiti covering road lines, sunlight blinding cameras, etc.) the systems don't have any real way to compensate, while humans instinctively and almost instantly switch to top-down reasoning, which lets us infer information based on our experiences and our lifelong immersion in, well, reality.
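To make the bottom-up versus top-down distinction a bit more concrete, here's a toy Python sketch. Everything in it is made up for illustration: the `Detection` fields, the `surface` context tag, and the thresholds are my own assumptions, not anything from the paper or from any real driving stack.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str               # what the vision model thinks it sees
    confidence: float        # model score from 0.0 to 1.0
    surface: str             # hypothetical context tag: "signpost", "billboard", ...
    visible_fraction: float  # how much of the object is unobscured, 0.0 to 1.0


def bottom_up_should_stop(d: Detection, threshold: float = 0.8) -> bool:
    """Pure sensor-level rule: stop whenever the detector is confident enough."""
    return d.label == "stop_sign" and d.confidence >= threshold


def top_down_should_stop(d: Detection) -> bool:
    """Same detection, filtered through hand-written expectations about the world."""
    if d.label != "stop_sign":
        return False
    # Expectation 1: real stop signs live on posts, not on billboards or T-shirts.
    if d.surface != "signpost":
        return False
    # Expectation 2: a sign half hidden by leaves is still a sign, so accept
    # a weaker detection when we know part of it is obscured.
    required = 0.4 if d.visible_fraction < 0.7 else 0.8
    return d.confidence >= required


scenes = {
    "clear sign on a post": Detection("stop_sign", 0.95, "signpost", 1.0),
    "stop sign on a billboard": Detection("stop_sign", 0.92, "billboard", 1.0),
    "sign half hidden by leaves": Detection("stop_sign", 0.55, "signpost", 0.5),
}

for name, d in scenes.items():
    print(f"{name}: bottom-up={bottom_up_should_stop(d)}, "
          f"top-down={top_down_should_stop(d)}")
```

The bottom-up rule slams the brakes for the billboard and sails past the leafy sign; the version with context gets both right. The catch, of course, is that I had to hand-write those two expectations, and they're just as brittle as anything else the moment reality serves up a third case I didn't think of. People don't need the list.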

Now, I know the usual response is that fixing these issues is just a matter of more training, giving the neural nets more experiences to process these "edge cases" so the algorithm can "know" that particular condition.

In fact, just this argument was made on the Twitter feed about the August 2019 crash:

Really, I think this response shows more about the inherent problems with this approach, since the "edge cases" being mentioned here are sunrise or sunset, which happen literally on a daily basis, making me question if those should count as "edge cases" at all.

There are other issues with just force-feeding an algorithm more examples, as mentioned in the paper:

For example, to fix the vegetation-obscuring-a-sign problem, many engineers will say "We just need more examples to train the algorithm to correctly recognize this condition." While that is one answer, it begs the questions as to how much of this finger-in-the-dyke engineering is practical or even possible? Every time a new sensor is created (like a new LIDAR (Light Detection And Ranging) sensor and every time this sensor experiences a new set of conditions it has not yet seen, it has to be trained with a significant amount of data that may have to be collected. The workload to do this is extremely high, which is one reason why there is such a talent drain caused by the current driverless car space race. All this intense effort that has a significant cost is occurring for systems with significant vulnerabilities.

The whole thing about reality and its edge cases is that they're inherently unpredictable, and the number of variables in play is, well, literally just about everything.

There's no way to train for every single possible variation on every single situation, but you don't need to if you want to drive—as long as you have the ability to handle top-down reasoning. Which, currently, no autonomous AI system does.
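If you want a feel for why "just add more training examples" runs out of road, here's a quick back-of-the-envelope sketch in Python. The factor names and counts are invented and deliberately tiny; they're assumptions for illustration, not measurements of anything.

```python
import math
from typing import Mapping

# Hypothetical, deliberately small counts of the ways each factor can vary.
factors = {
    "lighting": 6,
    "weather": 8,
    "road_surface": 5,
    "sign_condition": 10,
    "nearby_vehicle_behavior": 20,
    "pedestrian_behavior": 15,
}


def scenario_count(options: Mapping[str, int]) -> int:
    """Number of distinct combinations if every factor varies independently."""
    return math.prod(options.values())


total = scenario_count(factors)
print(f"{len(factors)} factors -> {total:,} distinct scenarios")  # 720,000

# Add one more factor with only ten options and the total grows tenfold.
with_debris = {**factors, "road_debris": 10}
print(f"{len(with_debris)} factors -> {scenario_count(with_debris):,}")  # 7,200,000
```

Six cartoonishly simplified factors already give you 720,000 combinations, and each new one multiplies the pile. Reality has a lot more than seven factors.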

Now, I want to be clear that I am not saying that Level 5 or something approaching Level 5 autonomy is impossible, but I do think the idea that independent cars running local software on local sensors alone (well, plus GPS) will be able to accomplish it isn't practical, or a worthwhile path to pursue.

We need to figure out some way to improve the generalization skills of self-driving vehicles, and to do that we may need to attempt to synthesize top-down reasoning. Maybe that includes robust car-to-car communication, or communication between cars and some kind of external assist system. Maybe it means we only bother with autonomy in specific contexts, like parts of town with miserable stop-and-go traffic and long highway trips.

This would mean staying limited to Level 4 autonomy, but maybe that's fine? If we can provide a robust network of L4-safe roads that are monitored and maintained to eliminate situations that would even need top-down reasoning, that could get most of what people want from autonomous travel, and they can drive their damn selves in the more complex (for computers but, importantly, not people) situations.

I mean, if you can sleep while you're stuck for two hours in traffic on the 405 or watch movies or work on a 6-hour highway trip, isn't that good enough, even if it means taking over to drive through your neighborhood to get back home?

I know computers are better at math, are faster, can take inputs from many more sensors and more kinds of sensors than I can. I know they don't get tired or horny or distracted or hungry or have to pee. All of this is true, which is why they can be great at bottom-up, sensor-and-rule-based situations.

But they're also absolute shit at generalizing, which we're great at.

This paper isn't terribly long, but it managed to quantify and explain concepts I've been clumsily dancing around the edges of for years. I suggest you give it a read, and by you, I mean Elon, too.

I'll let him come to his own conclusions, of course, like I have any choice otherwise. I'd also suggest that anyone considering paying $10,000 for a still-unfinished system, one the company's CEO is just now admitting is harder to pull off than originally thought, give it a read, too.

Preferably before you click that "buy" button.