Amazon's AI Driver Attention Cameras Are Denying Drivers Bonuses

AI meant to detect unsafe driving is penalizing delivery drivers for normal, safe driving.

How far do you have to turn your head, in your own car, to see your side-view mirrors? A few degrees? It's probably enough that your nose isn't perfectly aligned with the front of the car. To Netradyne's Driveri safety system, now installed in Amazon Prime delivery vehicles, that's enough to earn a demerit.

Amazon has long had apps that track a driver's speed, location, and driving habits, but earlier this year the company began installing the Driveri monitoring system in its Prime delivery vans. Now, drivers have told Vice's Motherboard that they're being penalized for glancing at mirrors, changing the radio station, or being cut off by other drivers.

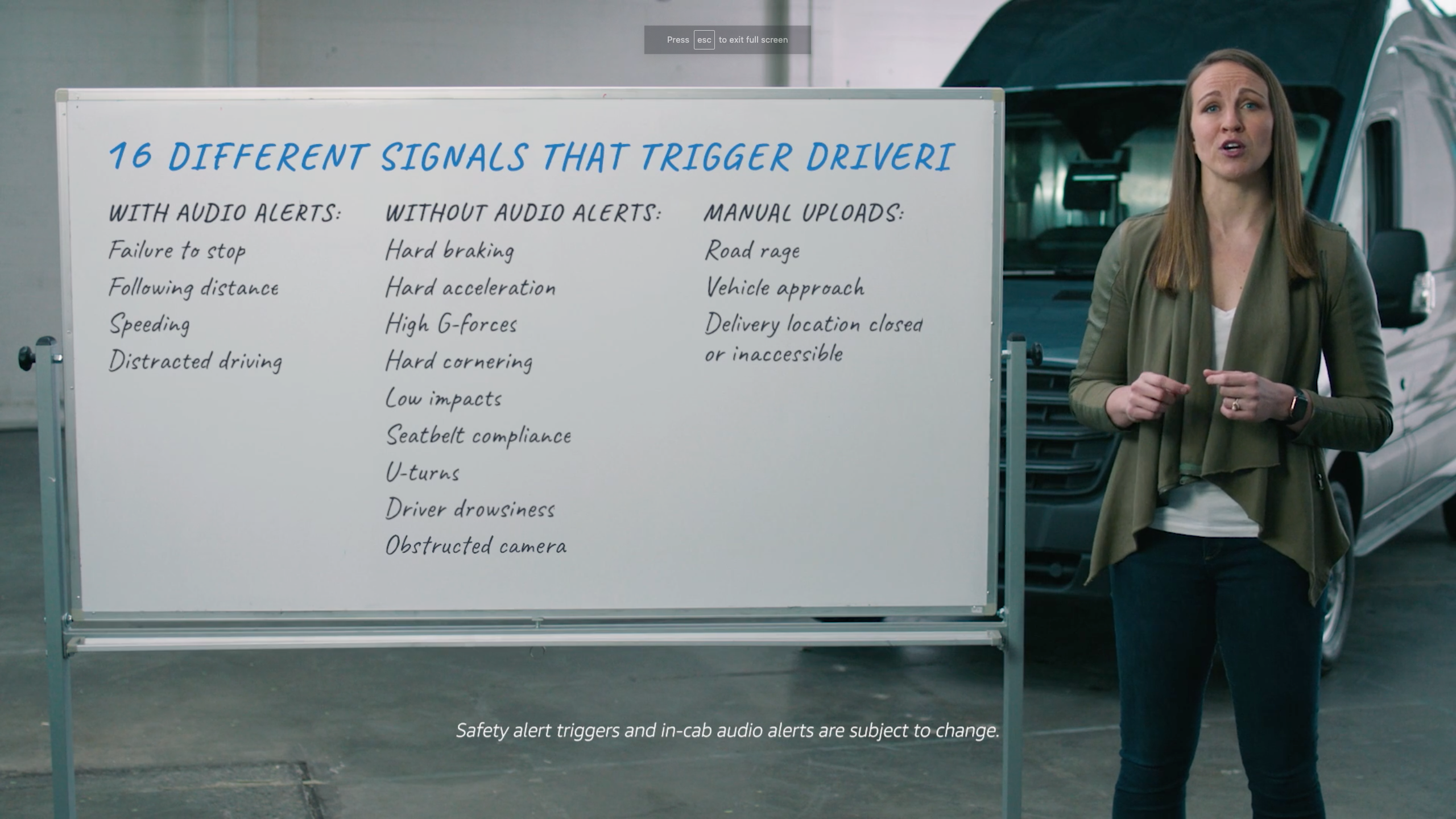

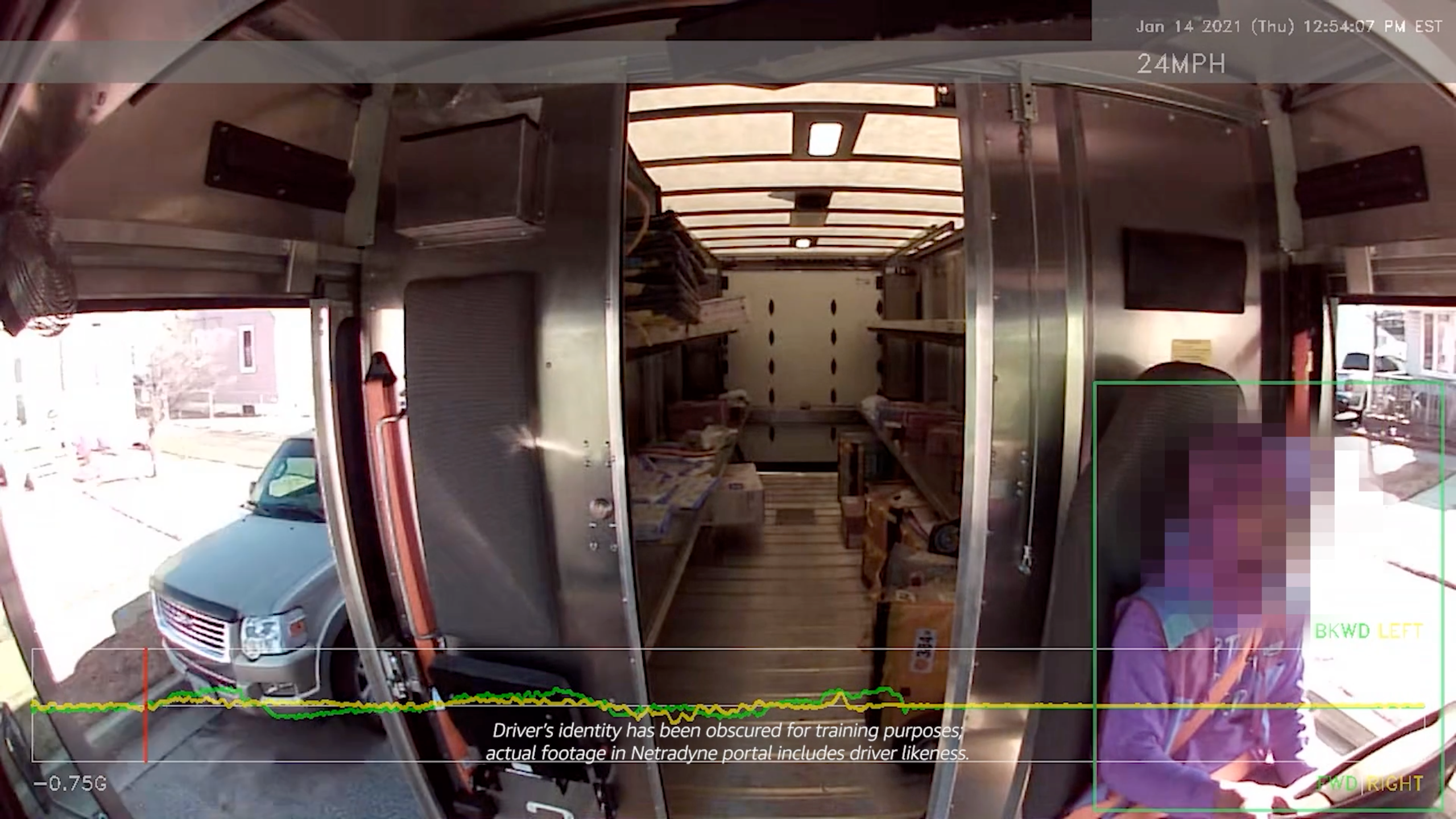

Driveri uses a system of two to four cameras, depending on the model installed, to track interior and exterior views of the vehicle. The system's AI uses these video feeds to analyze the road around a vehicle and record any unsafe driving incidents. According to a Vimeo video hosted by Karolina Haraldsdottir, Senior Manager for Last Mile Safety at Amazon, the company is tracking sixteen specific metrics.

When the system detects unsafe driving, it uploads a clip from the camera to a cloud portal visible to Amazon. In some cases, this is accompanied by a verbal warning from the device's speakers. Drivers, however, claim that the threshold for unsafe driving activity is far too low. From Motherboard:

> Netradyne cameras regularly punish drivers for so-called "events" that are beyond their control or don't constitute unsafe driving. The cameras will punish them for looking at a side mirror or fiddling with the radio, stopping ahead of a stop sign at a blind intersection, or getting cut off by another car in dense traffic, they said.

A low threshold for unsafe driving, followed by a verbal warning, is a familiar experience to anyone who's ever taken Driver's Ed. What's unfamiliar to those not employed by Amazon, however, is the weekly score the company generates from this Driveri data. These scores are used internally to determine whether a driver is eligible for bonuses or prizes for their performance, and drivers feel they've been unfairly penalized.

Many of these errors seem to be the fault of improperly trained AI image recognition. An Amazon driver in Oklahoma told Motherboard that a large percentage of erroneous violations come from stop signs:

"Either we stop after the stop sign so we can see around a bush or a tree and it dings us for that, or it catches yield signs as stop signs. A few times, we've been in the country on a dirt road, where there's no stop sign, but the camera flags a stop sign."

An AI is only as good as the data on which it's trained, and these systems seem to need considerably more training before they're ready for prime time. While tech companies may love to address problems with machine learning, it's worth taking the time to remember the imperfections that exist in their algorithms. When people's paychecks hang in the balance, a rush to automate rarely ends well.